How I let the trees grow

Published on Thursday, March 3, 2011 2:42:00 PM UTC in

Programming

When I was thinking about the Eco Contest 2011 on Silverlight Show, I had the idea to enrich my application with the visual gimmick of dynamically growing trees. I wanted to create both the branches and leaves on the fly randomly and animate them to create that impression. If you have already visited my entry you know how the final result looks like in action, and if not, you can find it here (feel free to leave a vote :-)). Let me show you an example image of a dynamically rendered tree once it has finished animating:

Those trees typically consist of ~20,000 single elements, with ~5,000 for the branches and ~15,000 leaves.

When I showed the app to some friends as work in progress, I was asked how I was able to perform that rendering so well without completely hogging all computer resources; I figured that the steps of optimization that went into this would be an interesting topic for others too, so I decided to write this blog post.

The initial approach

My first attempt to create a proof of concept for this was pretty naive. I thought a bit about the required data structures and visual elements and started to create this like any other application. In particular, I...

- ...used user controls instances for each individual element on the screen.

- ...used data binding to update the visuals with the properties from my data items.

- ...experimented with visual states for the animations.

- ...created all the elements that formed a tree beforehand.

- ...put all these elements in a canvas and started animating them.

What looks like a good idea for simple cases and normal UIs turned out to be "suboptimal" for the massive amounts of visual elements needed for this. Creating the required data structure, visual elements and adding all of them (still collapsed) to the canvas took up to 20 seconds at 90% CPU load on my computer. Animating the tree was even worse. Sometimes I even couldn't close the browser window because it wasn't able to register my mouse clicks anymore. In addition the sample add had an enormous hunger for memory; consumption was way too high. To sum it up: a big failure.

First improvements

This wasn't my first experience with graphics-intensive applications, and I had the feeling that I wouldn't get away with the overhead of user controls by the time I was writing the first iteration already. So naturally my first step was to simplify this and switch to more lightweight elements. In .NET, we have the DrawingVisual class for that which really provides good performance. But there's no support for it in Silverlight, so I decided to go with Paths. The additional consequence was that I also created and set up those elements programmatically now.

This resulted in two effects: a) the initial setup of the necessary elements went down from 20 seconds to approximately 5-6 seconds, and b) the rendering started out more smoothly than before, but went downhill from there quickly.

For the moment, I figured the second point to be more important, as I thought I could rather live with a long setup time than with declining rendering performance. The reason for that decline of course was that the runtime had to handle more and more elements as they became visible. Even worse, the leaves are transparent and overlap a lot, so the amount of blending operations also increased constantly over time.

Limiting the number of parallel animations

The only limitation I had built in from the beginning was that only branches from the same "generation" (branches that belong to the same depth level of the tree) were animated at the same time. For the first few levels that was sufficient, but depending on the (randomized) amount of branches created for the deeper levels, this resulted in thousands of parallel running storyboards for the last levels. Also, all the leaves animations were started at the same time when the branches had completed, which meant even more parallel storyboards.

The solution to this was a queuing mechanism: Start a certain amount of parallel animations and keep track of the running storyboards. When a storyboard finishes, take the next waiting storyboard and start it. In the final application, the maximum number of parallel animations is 300 for the branches and 200 for the leaves. I chose a lower number for the leaves due to the transparency they're using.

This brought some relief especially for the later stages of the rendering process when the deeper level of the tree and the leaves are animated. However, performance still was really bad because over time, simply too many objects that needed to be rendered were visible on the screen at the same time.

From dynamic to static

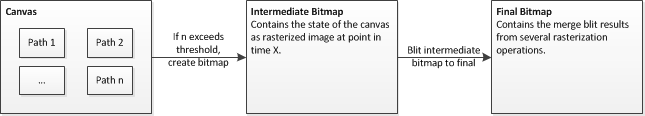

The approach I came up with to solve this problem was to create static images from the canvas content when the amount of elements gets too high and then clear the canvas content to start over. Thankfully the WriteableBitmap class has a constructor that lets you take "screenshots" from Silverlight's UI elements, which is what I do when the path element count exceeds a certain threshold. That intermediate bitmap is then blit to a main bitmap, which contains the merged result from several of those iterations. For the blit operation I am using the WriteableBitmapEx project, a set of extension methods for the writeable bitmap class which also includes an optimized blit method.

Unfortunately the result was not yet satisfying. To understand the problem, you have to remember that I animate the elements. The branches actually grow and the leaves are faded in, and there are always several animations running at the same time. Let's look at an example of what happens:

Let's say my threshold is one hundred finished elements, and the hundredth animation has completed. If I have a total number of elements that is greater than 100, the situation is that my canvas holds 100 completed elements and an additional amount of elements that are in the middle of their animation. This is the result of the rolling queuing mechanism I described above and actually desired behavior to create a continuous flow of appearing elements (as opposed to animating elements in batches). If I created bitmaps from the canvas in these situations, those partially animated elements would be included on it too. For the branches this would result in distortions in the final image (because they actually change shape) and for the leaves in false opacities (because multiple inclusions of the same leaf would result in additive blending).

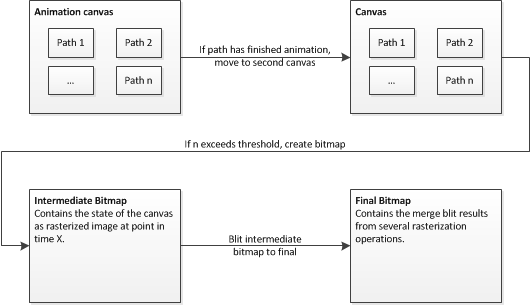

So the construct that can be found in the final application is a bit more complicated and consists of two canvas elements: The first one contains all elements that are currently animated, whereas the second one contains those elements that have finished animating. The transfer is done manually as soon as an element's storyboard has finished. Once the threshold of elements in the second canvas is reached, the same mechanism as I just described is used to create a static image from it. That way this static image is always an exact reproduction of what happens and happened on the canvas(es).

To make this work, all these elements are positioned on top of each other in a custom user control. The final bitmap is the background of that user control, the second canvas is positioned on top of the final bitmap and has a transparent background, and on top of that is the animation canvas, again with a transparent background. This works pretty well if the controls are aligned in the same way (e.g. in a grid) and have the same size.

If you look at the final application you will see that these transitions are not perceptible. The blitting happens every 100 finished elements, and because a tree consists of ~20,000 paths, blitting is performed ~200 times for each tree. Of course this approach has some limitations. If you rely on the z-order of elements for example, you need to render them sorted by their z-index or it won't work. In my case this wasn't an issue though.

After implementing this, smooth rendering was possible for the first time from start to end, without performance degradation.

Object pooling

The only problem left was the very long preparation times. There were actually two problems with that:

- I was still creating all the needed elements before I started rendering, namely a typical number of 20,000 paths. Of course only a fraction of them were actually required for the animations. Each time the blit threshold was reached, all of the paths were removed from the second canvas; and on the animation canvas only the maximum number of parallel animations (as described above in the queuing mechanism paragraph) were actually visible and required.

- In between successive renderings of multiple trees, I threw away all the 20,000 paths and recreated them from scratch. Not very optimized!

At first both problems seemed solvable easily. I just had to create the required elements on the fly instead of pre-create all of them at once. The required changes were implemented quickly, however, after some profiling I figured that quite a share of the time still was spent with the creation of these objects. I did not need to create a lot of them at once anymore, yet still 20,000 of them were created and thrown away for each tree. What required 5 seconds computation time in the beginning until that point, was now simply distributed over the course of the rendering of a whole tree.

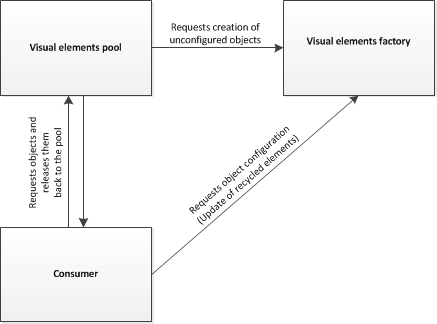

That lead me to the idea to use object pooling for the paths and storyboards. In the final application, this construct actually consists of two classes. There's the object pool itself that does nothing but maintain the available objects and those in use, and a second factory that is able to create and update the required path and storyboard elements based on the properties from my model items.

The object pool works in the usual way that the number of objects is increased by a certain amount when the pool runs out of available objects, and objects that are no longer required are returned to the pool and recycled for the next requests.

Note: My situation might not seem like the textbook scenario for object pooling at first, but since my numbers are actually pretty static, I experienced quite a boost by introducing it. The total number of objects the pool needs to create always is the maximum possible number of currently animating elements plus the threshold of finished objects that have not been rasterized yet. Also, all those objects are constantly required through the whole life-time of the application as the rendering is looped and performed all the time. So in my case, the benefit ended up as being the difference between creating ~1,000 objects once and reuse them all the time, and creating a total of 20,000 dynamically for each tree, which had quite an impact on lowering average CPU load in the long run.

And that's it?

Yes! I was playing with some more ideas to improve performance, but none of them paid off in the end. It's particularly noteworthy that GPU acceleration did not result in any performance gains. In fact, for one of the testers it even decreased performance.

The animations as you can see them in the application are mostly a result of fine-tuning the above settings. I tried to find a balance between the number of total elements, the number of parallel animations, the threshold for blitting and other details so the application runs fine on a mid-range computer. On my ultra-portable laptop with a ultra-mobile processor that only runs with 1.06 GHz the CPU load never exceeds 30 percent. Average load is distinctly lower. I'm also pleased with memory consumption, which usually should be around 70-100 MiB.

I'm thinking about releasing the whole source of the contest entry. If you are interested in it or want to encourage me to do so, let me know.

Thanks for reading!

Tags: Eco Contest · Optimization · Silverlight