Chronicles of a Failure

Published on Friday, September 27, 2013 3:00:00 AM UTC in Announcements & Philosophical

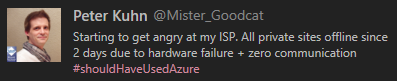

Some people need to be taught the hard way. Once again, I recently turned out to be one of them. As some of you may have noticed, strange things happened to my blog and several of my other sites recently: they went from being completely offline, through returning 500s, to posts disappearing and coming back at random. Hopefully everything is back to normal by now, as you are currently reading the redesigned blog on my new domain www.mistergoodcat.com. So what happened?

Worst Case Scenario

I've been a customer of a rather small but longstanding German ISP for several years. I won't name names, but those of you who are interested enough should easily know how and where to get that information. I was quite satisfied with their service, and in contrast to some of the big players I had used before, it always provided that cozy feeling – you know, like when you call support after hours or on the weekend and end up talking to the boss himself, with children's laughter in the background, instead of having to deal with a random, anonymous call center agent. I'm not saying it was perfect; we had some ups and downs over the years. But all in all I was satisfied, and honestly, it was just too convenient to leave everything as-is, even though moving everything to Azure had been on my TODO list for at least a year.

As you may have guessed already, I came to regret that procrastination a lot over the last few days.

It all started last Wednesday when I noticed that e-mails were not coming through. What I thought of as only a short hiccup got my immediate attention when I realized that some web sites were timing out as well. Sure enough, shortly afterwards I received an email telling me that due to a hardware failure my account was completely offline: no web sites, no emails, nothing. The good news, however, was that a technician would replace the parts in question the next morning, and everything should be back to normal then.

Playing Dead

Friday came and went without any further updates. I tried to ping my sites every now and then, and at some point empty IIS landing pages started to show up, but it didn't go anywhere from there, so I contacted support again on Friday afternoon – but got no response.

On Saturday some of my own landing pages seemed to be working again, but not all of them. It also seemed that web applications in subfolders (including my blog) did not work at all, and anything that required a database simply threw errors. Since I couldn't log on to any of the backend services or even FTP, I pinged support again – and, sure enough, didn't get any reply.

On Monday – still no changes and everything far from being back to normal – I opened another support ticket and now got a response very quickly. Unfortunately, it wasn't exactly what I wanted to hear.

Taking Stock

In detail, the results of that hardware defect were the following:

- All email accounts, including the contents of those mailboxes, were lost and couldn't be restored. To prevent further loss of emails, catch-all accounts had been created for all domains.

- All SQL Server databases were lost and couldn't be restored.

- All configuration of web applications and server settings was lost and couldn't be restored; this included user accounts for FTP, custom MIME types and other IIS settings.

- Regarding the file system contents, a backup could successfully be restored.

Apparently I was supposed to have received newly created login credentials for the backend as early as Friday, but for some reason they never reached me (or my spam filter, by the way).

I was shocked.

Now you may argue that this is what you get if you're only willing to pay a few hundred bucks a year for a full-blown web presence. But wait, it gets better, to the point where that argument no longer holds:

When I tried to get an impression of what would be necessary to restore everything, I first realized that no catch-all email accounts had been created after all. There was only one single mail account for one of the several domains, and it didn't have the catch-all flag set. Furthermore, I wasn't able to create any email accounts and ran into several error messages in the backend when I tried. The next thing was to take a look at the restored files; I realized that the backup the provider had used was from April 2012. Yes, you read that right. 17 months old. This is 2013, and no matter how cheap you are, doing backups every two years or so is simply preposterous. And of course the question remains whether $400+ per year really should be considered a low-budget offer from which you should expect something like that.

I tried to contact support about this again, also asking whether there was any other way of restoring a newer backup, or at least the databases. Sometimes, not receiving a response says it all, doesn't it?

Paranoia to the Rescue

Luckily for me, I do not trust anyone™. I do manual backups of everything regularly, and then I make backups of these backups and store them elsewhere, in case Godzilla decides to stomp down our house. Unfortunately my last backup of the sites was still a few weeks old, which meant that I had lost approximately one month of data. For the most part, however, you can get your data back from other places if you are clever (no, I'm not talking about the NSA) – first I dug up some posts in Google's cache, then I realized that Windows Live Writer provides you with the last local copy of posts even when it's not able to retrieve the online version – thank you, Microsoft! That meant that I could recover all blog posts, for example, even though the comments on these posts were lost.

So all in all it didn't look too bad, but of course there was still an enormous amount of work ahead. We're talking not only about uploading literally gigabytes of data like screencasts, but also about many things that couldn't be done unattended, like restoring configurations and setting up email accounts for everybody again. The final question hence was whether I should take the risk, set up everything again and wait for the next disaster – or…? Well, of course I finally took the opportunity to get back to that TODO list of mine and move some things to Azure – which I will blog about in more detail shortly.

A Conclusion

The most important thing to take from this for me personally is not that you shouldn't work with small companies, and it's not that I should blame my ISP for what happened (even though some of their actions and policies are more than questionable). The failure's on me, because I made myself dependent on one single entity, which is a very dangerous thing to do. Out of laziness I had enjoyed a full-service offer for years, until it all came crashing down, and fast: domain maintenance and DNS, hosting web applications and static content, databases, email services and more all came from one single provider, and as it turned out, most of it even from one single physical machine (doh!), which resulted in the worst possible outcome last week. That should teach me, and you too, to separate concerns not only when you're working on your code :) but also in other areas – more on that soon.

-Peter

Update (2013-10-04): In the meantime, the ISP responded with a generic apology sent out to all affected customers, claiming that they did have newer backups, which however turned out to be faulty and couldn't be restored. As compensation, those customers will receive a free month as credit with their next invoice (which would be some time in 2014 in my case).

Tags: Disaster Recovery · WTF